Perceive, Represent, Generate: Translating Multimodal Information to Robotic Motion Trajectories

Fábio Vital, Miguel Vasco, Alberto Sardinha, and 1 more author

In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022

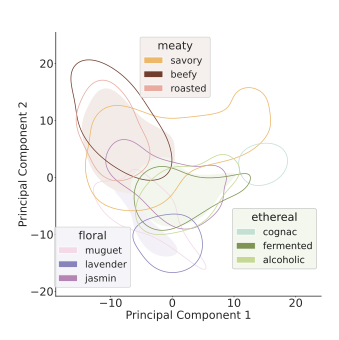

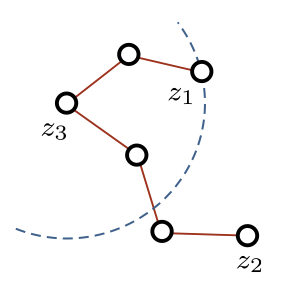

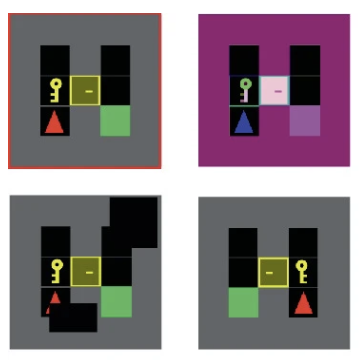

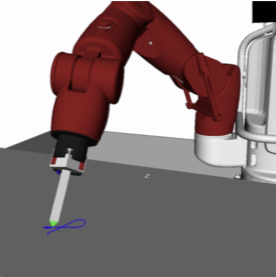

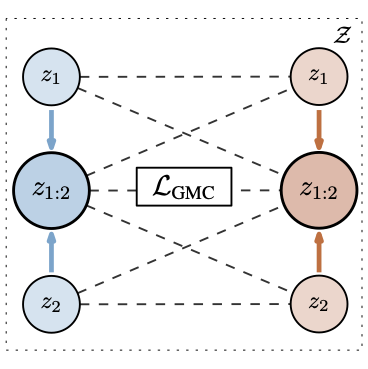

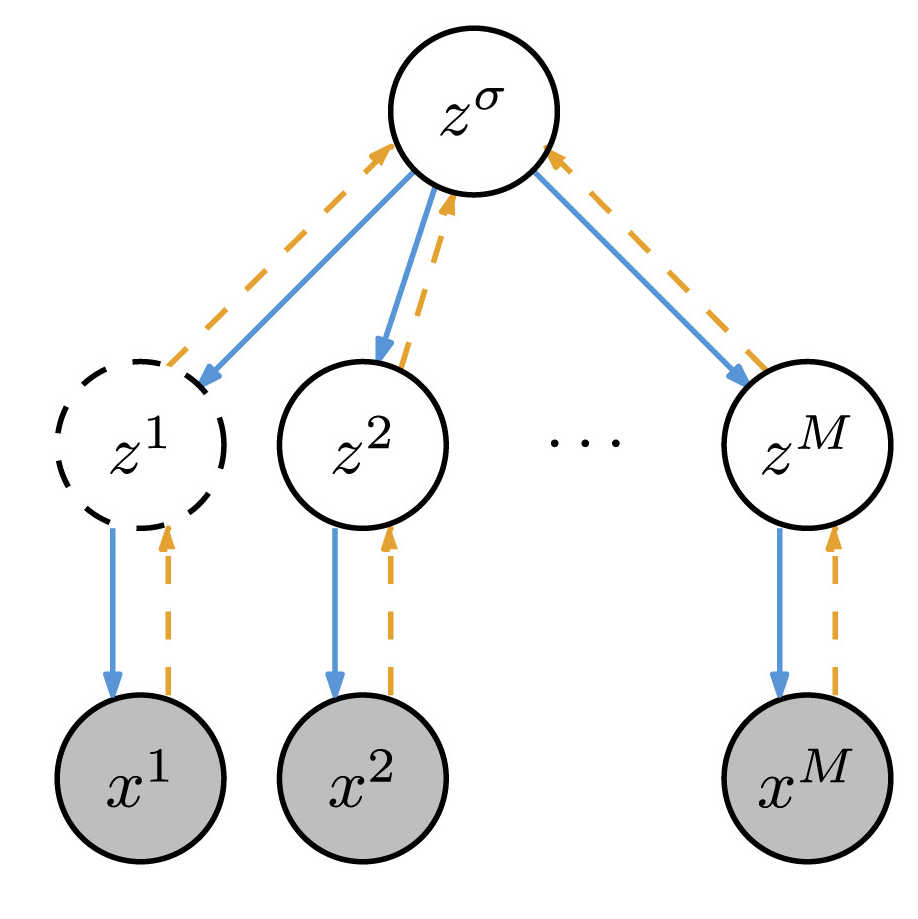

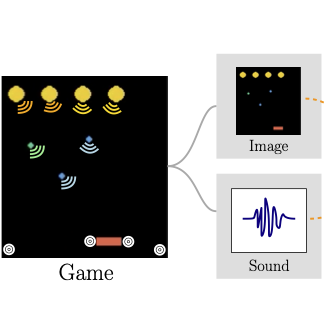

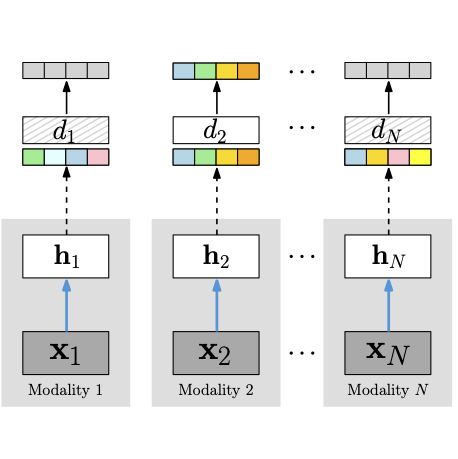

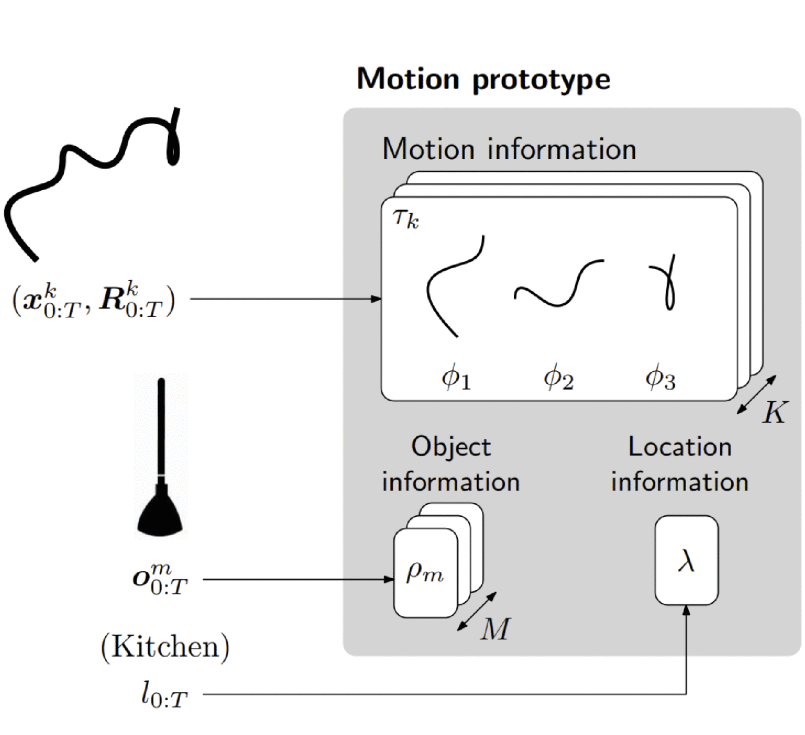

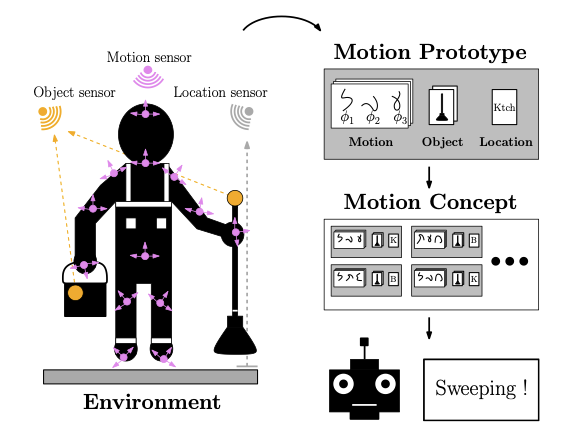

We present Perceive-Represent-Generate (PRG), a novel three-stage framework that maps perceptual information of different modalities (e.g., visual or auditory), corresponding to a sequence of instructions, to an adequate sequence of movements to be executed by a robot. In the first stage, we perceive and pre-process the given inputs, isolating individual commands from the complete instruction provided by a human user. In the second stage, we encode the individual commands into a multimodal latent space, employing a deep generative model. Finally, in the third stage, we convert the multimodal latent values into individual trajectories and combine them into a single dynamic movement primitive, allowing its execution on a robotic platform. We evaluate our pipeline in the context of a novel robotic handwriting task, where the robot receives as input a word through different perceptual modalities (e.g., image, sound) and generates the corresponding motion trajectory to write it, creating coherent and readable handwritten words.
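
The third stage turns per-command trajectories into one dynamic movement primitive (DMP). The sketch below is a minimal illustration under our own assumptions, not the authors' implementation. Each decoded letter is treated as a 2D point sequence, and the hypothetical helper `toy_letter` generates synthetic humps in place of the generative model's output. A second hypothetical helper, `concatenate`, joins the letters end to end. The code then fits one standard discrete DMP per coordinate using locally weighted regression. The forcing term is scaled by the phase `x` only, without the common `(g - y0)` amplitude factor. This way, a coordinate that starts and ends at the same height, such as the vertical axis of a word, can still be learned.

```python
import math


def toy_letter(n=100, width=1.0):
    """Hypothetical stand-in for one decoded letter: a single hump, drawn rest to rest."""
    pts = []
    for k in range(n):
        u = k / (n - 1)
        # Minimum-jerk progress: zero velocity and acceleration at both ends.
        s = 10 * u**3 - 15 * u**4 + 6 * u**5
        pts.append((width * s, math.sin(math.pi * s)))
    return pts


def concatenate(letters):
    """Join letters left to right, shifting each to start where the previous one ended."""
    word = list(letters[0])
    for letter in letters[1:]:
        ox = word[-1][0] - letter[0][0]
        oy = word[-1][1] - letter[0][1]
        word.extend((px + ox, py + oy) for px, py in letter[1:])  # skip the duplicated joint
    return word


def gradient(v, dt):
    """Finite differences: central in the interior, one-sided at the two ends."""
    mid = [(v[k + 1] - v[k - 1]) / (2 * dt) for k in range(1, len(v) - 1)]
    return [(v[1] - v[0]) / dt] + mid + [(v[-1] - v[-2]) / dt]


class DMP1D:
    """Discrete DMP for one coordinate; forcing term scaled by the phase x only."""

    def __init__(self, n_basis=100, alpha_z=25.0, beta_z=6.25, alpha_x=4.0):
        self.az, self.bz, self.ax = alpha_z, beta_z, alpha_x
        # Gaussian basis centres evenly spaced in time, mapped to phase x = exp(-alpha_x t / tau).
        self.c = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        self.h = [1.0 / (self.c[i + 1] - self.c[i]) ** 2 for i in range(n_basis - 1)]
        self.h.append(self.h[-1])
        self.w = [0.0] * n_basis

    def _psi(self, x):
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def _forcing(self, x):
        psi = self._psi(x)
        return x * sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-10)

    def fit(self, y, dt):
        """Learn the basis weights from one demonstration y sampled every dt seconds."""
        self.y0, self.g, self.tau = y[0], y[-1], dt * (len(y) - 1)
        yd = gradient(y, dt)
        ydd = gradient(yd, dt)
        xs = [math.exp(-self.ax * k * dt / self.tau) for k in range(len(y))]
        # Invert tau*z' = alpha_z*(beta_z*(g - y) - z) + f, with z = tau*y', to get the target forcing.
        f = [self.tau**2 * a - self.az * (self.bz * (self.g - p) - self.tau * v)
             for p, v, a in zip(y, yd, ydd)]
        psis = [self._psi(x) for x in xs]
        # Locally weighted regression: one scalar weight per basis function.
        for i in range(len(self.w)):
            num = sum(ps[i] * x * ft for ps, x, ft in zip(psis, xs, f))
            den = sum(ps[i] * x * x for ps, x in zip(psis, xs))
            self.w[i] = num / (den + 1e-10)

    def rollout(self, dt, substeps=10):
        """Euler-integrate from y0 at rest over one tau; return one sample every dt."""
        step = dt / substeps
        y, z, x = self.y0, 0.0, 1.0
        path = [y]
        for _sample in range(round(self.tau / dt)):
            for _sub in range(substeps):
                dz = (self.az * (self.bz * (self.g - y) - z) + self._forcing(x)) / self.tau
                y, z, x = y + step * z / self.tau, z + step * dz, x - step * self.ax * x / self.tau
            path.append(y)
        return path


# Usage: three synthetic letters -> one word trajectory -> one DMP per coordinate.
word = concatenate([toy_letter() for _ in range(3)])
dt = 0.01
traj = []
for d in range(2):
    dmp = DMP1D()
    dmp.fit([p[d] for p in word], dt)
    traj.append(dmp.rollout(dt))
max_err = max(abs(traj[d][k] - word[k][d]) for d in range(2) for k in range(len(word)))
print(f"max reproduction error: {max_err:.4f}")
```

Fitting a single DMP over the concatenated word keeps one goal and one time scale for the whole writing motion. The number of basis functions (100), the gains `alpha_z`, `beta_z`, `alpha_x`, and the integration step are illustrative defaults, not values taken from the paper.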

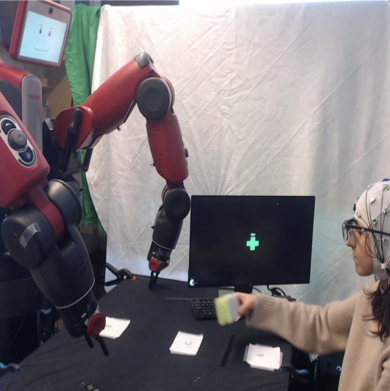

Explainable Agency by Revealing Suboptimality in Child-Robot Learning Scenarios. In International Conference on Social Robotics (ICSR), 2020